The term "GPU" was coined by Sony in reference to the 32-bit Sony GPU (designed by Toshiba) in the PlayStation video game console, released in 1994.

Dean Prok

Chief Information Security Officer

1990s

The NVIDIA RIVA 128 was one of the first consumer-facing GPUs to integrate a 3D processing unit and a 2D processing unit on a single chip. 3D accelerator cards then moved beyond being simple rasterizers to add another significant hardware stage to the 3D rendering pipeline. The Nvidia GeForce 256 (also known as NV10) was the first consumer-level card with hardware-accelerated transform and lighting (T&L).

The term "graphics processing unit" was popularized in 1999, when Nvidia marketed its GeForce 256 with the capabilities of graphics transformation, lighting, and triangle clipping. These are math-heavy computations, which ultimately help render three-dimensional spaces. Because the hardware is tailored to these operations, they can be heavily optimized and accelerated. Rendering repeats the same floating-point calculations millions of times across independent vertices and pixels, which makes it a perfect candidate for running in parallel.
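To make "transformation and lighting" concrete, here is a minimal pure-Python sketch of the two calculations for a single triangle. The matrix, triangle, and light direction are made-up illustration data, and the function names are hypothetical; real hardware performs the same kind of arithmetic for every vertex in a scene.

```python
def transform(matrix, vertex):
    """Multiply a 4x4 row-major matrix by a homogeneous (x, y, z, w) vertex."""
    return tuple(sum(row[i] * vertex[i] for i in range(4)) for row in matrix)


def diffuse(normal, light_dir):
    """Lambertian diffuse term: dot(N, L) clamped to [0, 1] (unit vectors assumed)."""
    dot = sum(n * l for n, l in zip(normal, light_dir))
    return max(0.0, min(1.0, dot))


# Hypothetical scene data: move a triangle 2 units along x and 5 units away.
translate = [
    [1.0, 0.0, 0.0, 2.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, -5.0],
    [0.0, 0.0, 0.0, 1.0],
]
triangle = [(0.0, 0.0, 0.0, 1.0), (1.0, 0.0, 0.0, 1.0), (0.0, 1.0, 0.0, 1.0)]
normal = (0.0, 0.0, 1.0)      # surface faces the viewer
light_dir = (0.0, 0.0, 1.0)   # light shines straight at the surface

# Each vertex is transformed independently of the others.
moved = [transform(translate, v) for v in triangle]
brightness = diffuse(normal, light_dir)
```

No vertex depends on the result for any other vertex, which is why this stage maps so well onto parallel hardware.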

2000s

Nvidia was first to produce a chip capable of programmable shading: the GeForce 3. Used in the Xbox console, this chip competed with the one in the PlayStation 2, which used a custom vector unit for hardware-accelerated vertex processing. With the introduction of the Nvidia GeForce 8 series and its new generic stream processing units, GPUs became more generalized computing devices.

Parallel GPUs are making computational inroads against the CPU, and a subfield of research, dubbed GPU computing or GPGPU for general purpose computing on GPU, has found applications in fields as diverse as machine learning, oil exploration, scientific image processing, linear algebra, statistics, 3D reconstruction, and stock options pricing.

GPGPU was the precursor to what is now called a compute shader (e.g. CUDA, OpenCL, DirectCompute) and actually abused the hardware to a degree by treating the data passed to algorithms as texture maps and executing algorithms by drawing a triangle or quad with an appropriate pixel shader.

Nvidia's CUDA platform, first released in 2007, was the earliest widely adopted programming model for GPU computing. OpenCL is an open standard defined by the Khronos Group that allows for the development of code for both GPUs and CPUs with an emphasis on portability. OpenCL solutions are supported by Intel, AMD, Nvidia, and ARM, and according to a 2011 report by Evans Data, OpenCL had become the second most popular HPC tool.

2010s

In 2010, Nvidia partnered with Audi to power their cars' dashboards, using the Tegra GPU to provide increased functionality to cars' navigation and entertainment systems.

The Kepler line of graphics cards by Nvidia was released in 2012 and was used in Nvidia's 600 and 700 series cards. One feature of this GPU microarchitecture is GPU Boost, a technology that raises or lowers a video card's clock speed according to its power draw. The Kepler microarchitecture was manufactured on the 28 nm process.

The PS4 and Xbox One were released in 2013; they both use GPUs based on AMD's Radeon HD 7850 and 7790. Nvidia's Kepler line of GPUs was followed by the Maxwell line, manufactured on the same process.

Nvidia's 28 nm chips were manufactured by TSMC in Taiwan. Compared to the earlier 40 nm technology, this manufacturing process allowed a 20 percent boost in performance while drawing less power.

Virtual reality headsets have high system requirements; manufacturers recommended the GTX 970 and the R9 290X or better at the time of their release. Cards based on the Pascal microarchitecture were released in 2016. The GeForce 10 series belongs to this generation of graphics cards. They are made using the 16 nm manufacturing process, an improvement over the 28 nm process used for the previous microarchitectures.

Nvidia released one non-consumer card under the new Volta architecture, the Titan V. Changes from the Titan Xp, Pascal's high-end card, include an increase in the number of CUDA cores, the addition of tensor cores, and HBM2. Tensor cores are designed for deep learning, while high-bandwidth memory is on-package, stacked, lower-clocked memory that offers an extremely wide memory bus. To emphasize that the Titan V is not a gaming card, Nvidia removed the "GeForce GTX" prefix it adds to consumer gaming cards.

Artificial Intelligence

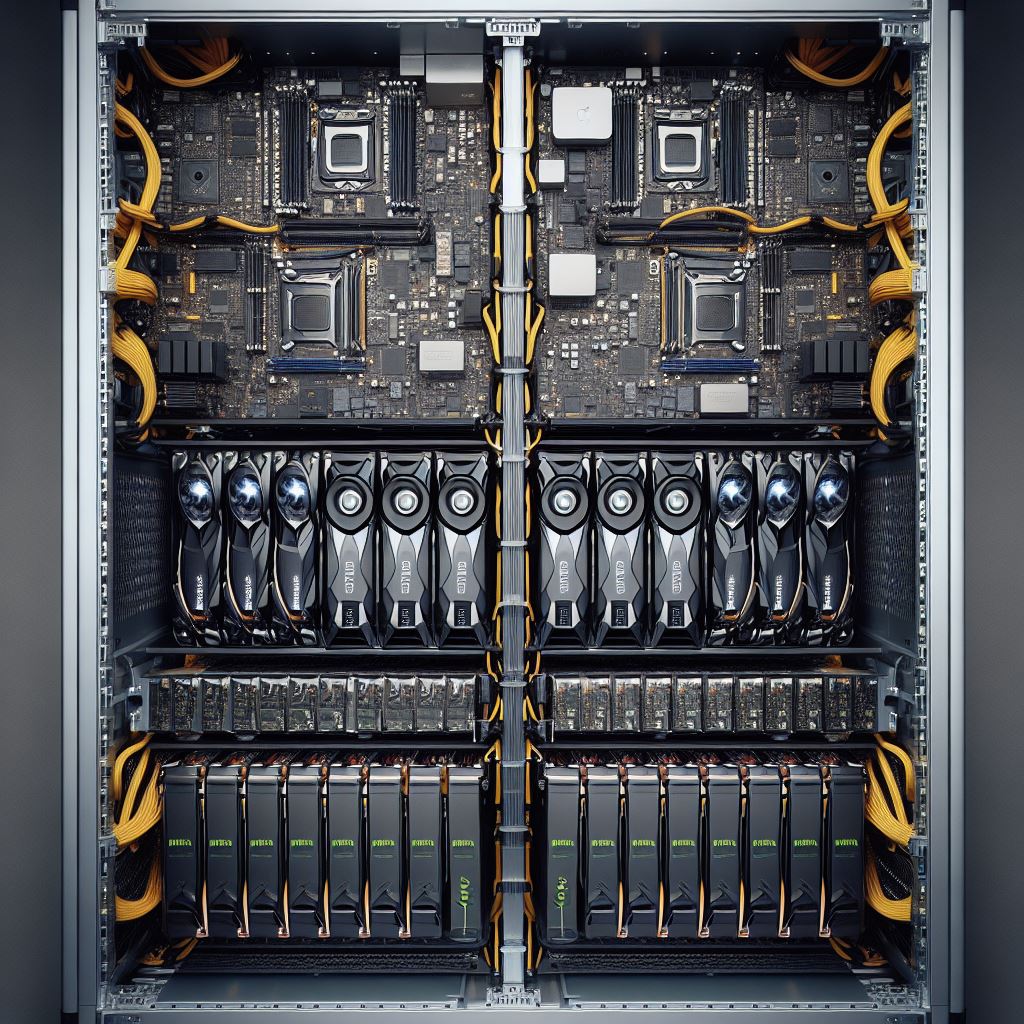

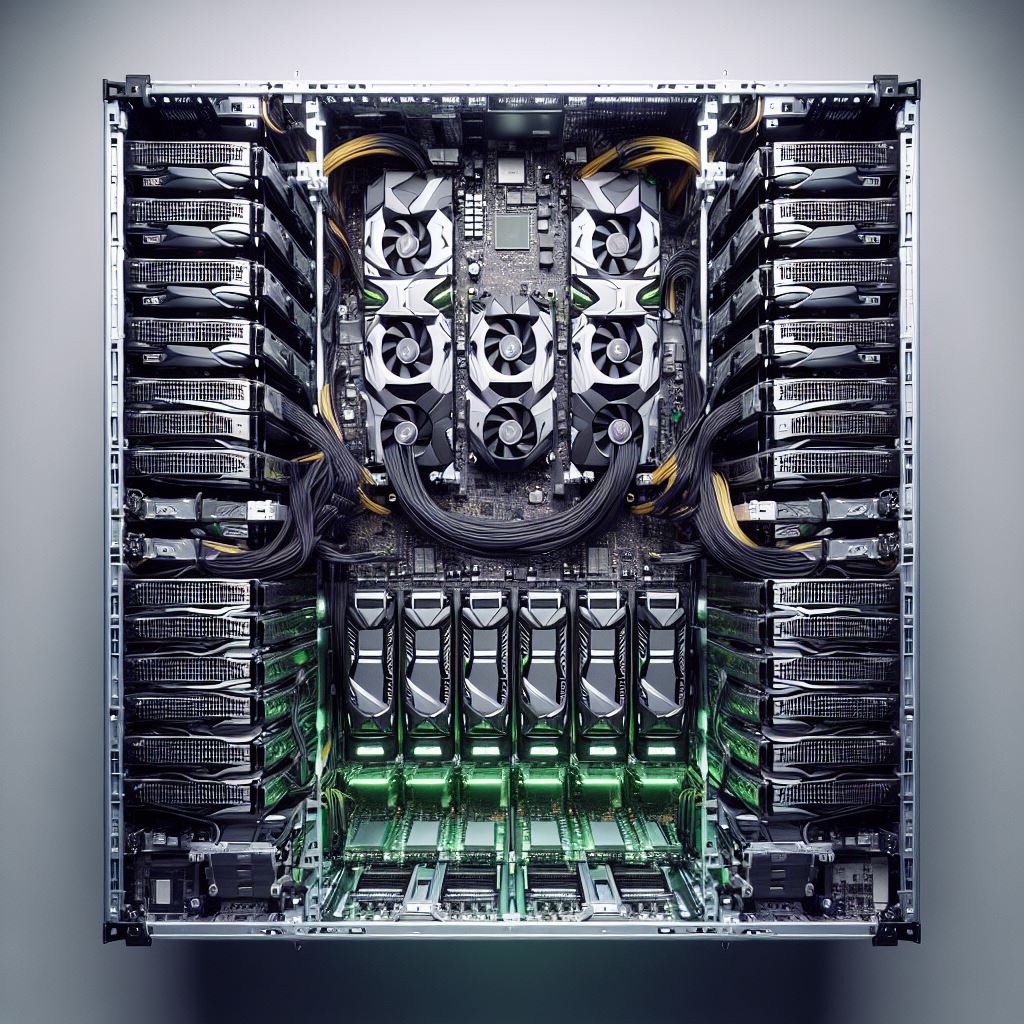

Graphics processing units (GPUs) have become the foundation of artificial intelligence. Before GPUs were widely used, machine learning was too slow and too inaccurate for many of today's applications. Training on GPUs made a remarkable difference for large neural networks. Deep learning went on to deliver solutions for image and video processing, bringing technologies such as autonomous driving and facial recognition into the mainstream.

The connection between GPUs and Red Hat OpenShift does not stop at data science. High-performance computing is one of the hottest trends in enterprise tech. Cloud computing makes it possible to run, on demand, tasks that were once reserved for supercomputers, which can save both time and money.

How GPUs work

Let’s back up and make sure we understand how GPUs do what they do.

A single GPU can outperform dozens of CPUs with the help of caching and many additional cores. Imagine we are attempting to process high-resolution images. If one CPU takes one minute to process a single image, we would be stuck if we needed to go through the nearly one million images that make up a video: the job would take nearly two years to run on a single CPU.

Adding CPUs speeds up the process roughly linearly. However, even with 100 CPUs, the job would still take about a week, not to mention the expensive hardware bill. A few GPUs, with parallel processing, can solve the problem within a day. This hardware makes previously impractical tasks possible.
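The CPU estimate is simple arithmetic, and it can be checked with a short sketch. It assumes the figures from the example (one minute per image, the image count rounded up to one million) and perfectly linear scaling across CPUs, which real systems rarely achieve; the function name is hypothetical.

```python
MINUTES_PER_DAY = 60 * 24


def days_needed(images, minutes_per_image, workers):
    """Wall-clock days, assuming perfectly linear scaling across workers."""
    return images * minutes_per_image / workers / MINUTES_PER_DAY


images = 1_000_000  # "nearly a million", rounded up for simplicity

single = days_needed(images, minutes_per_image=1, workers=1)
hundred = days_needed(images, minutes_per_image=1, workers=100)

print(f"1 CPU:    {single:.0f} days ({single / 365:.1f} years)")
print(f"100 CPUs: {hundred:.1f} days")
```

Under these assumptions, one CPU needs roughly 694 days (about 1.9 years) and 100 CPUs need just under 7 days.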

The evolution of GPUs

Eventually, the capabilities of GPUs expanded beyond graphics to workloads such as artificial intelligence, which often requires running computations on gigabytes of data. With the help of complementary software packages, users can tap into this high-speed computing through simple calls to APIs and coding libraries.

In November 2006, NVIDIA introduced CUDA, a parallel computing platform and programming model. CUDA lets developers use the parallel compute engine in NVIDIA’s GPUs efficiently, and it guides them to partition a complex problem into smaller, more manageable sub-problems, each of which is independent of the others' results.
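The partitioning idea can be illustrated without a GPU. The sketch below is not CUDA code; it is a CPU-side analogy in Python, with hypothetical function names, that splits a sum of squares into independent chunks and combines the partial results. Python threads will not make this particular computation faster, so treat it as a picture of the structure rather than of the speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(values, size):
    """Split values into consecutive, non-overlapping chunks."""
    return [values[i:i + size] for i in range(0, len(values), size)]


def sum_of_squares(chunk):
    """One sub-problem: it reads only its own chunk and shares no state."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, chunk_size=1000, workers=4):
    """Solve each chunk independently, then combine the partial results."""
    chunks = chunked(values, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


data = list(range(10_000))
total = parallel_sum_of_squares(data)
```

Because no chunk needs another chunk's result, the sub-problems can run in any order or all at once, which is the property that lets a GPU spread such work across thousands of threads.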

NVIDIA further extended its reach by partnering with Red Hat OpenShift to bring CUDA to Kubernetes, allowing customers to develop and deploy applications more efficiently. Prior to this partnership, customers who wanted to run Kubernetes on top of GPUs had to manually build containers for CUDA and write the software that integrates Kubernetes with GPUs. This process was time-consuming and prone to errors. Red Hat OpenShift simplified it by enabling the NVIDIA GPU Operator to automatically containerize CUDA and other required software when a customer deploys OpenShift on top of a GPU server.

Red Hat OpenShift Data Science (RHODS) expanded the mission of leveraging and simplifying GPU usage for data science workflows. Now when customers start their Jupyter notebook server on RHODS, they can choose the number of GPUs required for their workflow and select GPU-enabled PyTorch and TensorFlow notebook images. You may be able to select one or more GPUs, depending on the GPU machine pool added to your cluster. This gives customers the power to use GPUs in their data mining and model processing tasks.
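Once a notebook server is running, one way to confirm which GPUs it can see is to ask PyTorch directly. This is a minimal sketch that assumes a PyTorch notebook image; the `visible_gpus` helper is a hypothetical name, and it returns an empty list when PyTorch is not installed or no CUDA device is available.

```python
def visible_gpus():
    """Return the names of CUDA devices PyTorch can see, or [] if there are none."""
    try:
        import torch
    except ImportError:
        return []  # PyTorch is not installed in this image
    if not torch.cuda.is_available():
        return []  # no usable CUDA device or driver
    return [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]


gpus = visible_gpus()
print(f"{len(gpus)} GPU(s) visible: {gpus}")
```

The length of the returned list should match the number of GPUs requested when the notebook server was started.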

By the mid-2000s, NVIDIA Corporation’s graphics processing unit (GPU) hardware was widely accepted to have set the standard for digital content creation in product design, movie special effects and gaming. NVIDIA’s relentless pursuit of innovation also brought diversification. From 2008 to 2018, NVIDIA expanded into additional markets (e.g., auto) and became a major player in system-on-a-chip (SoC) technology, parallel processing and Artificial Intelligence (AI).

Take a trip through time and watch a bit of graphics history—where art, science and research come together.